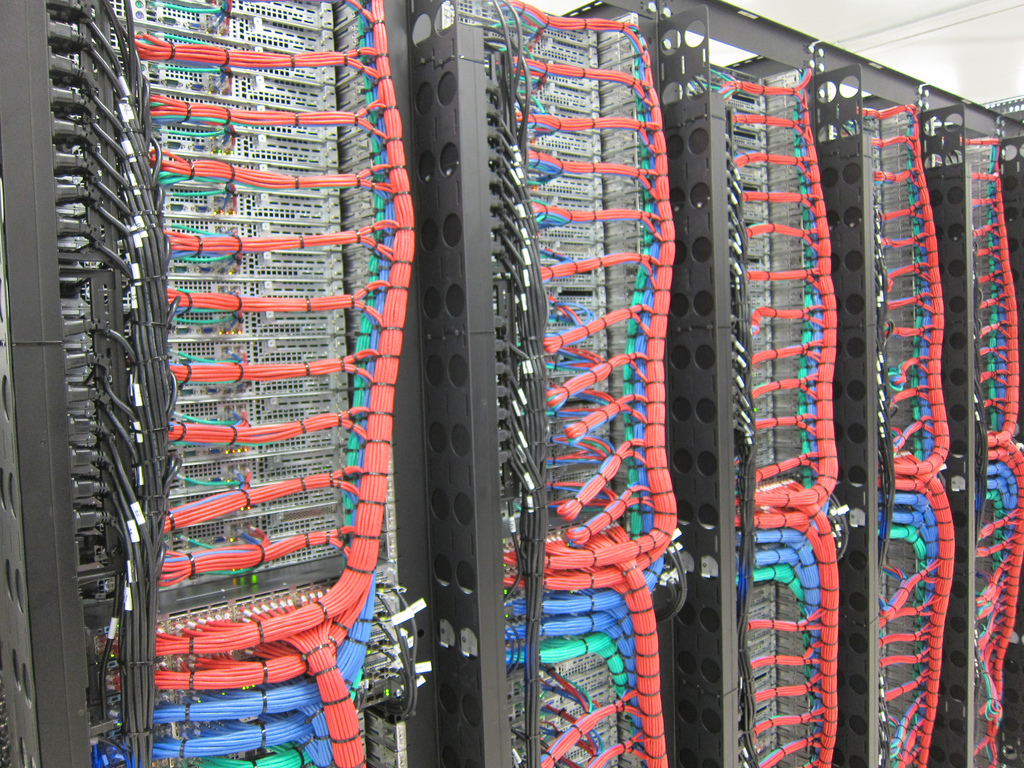

If you can create a port forward in your router and run stuff at your house, what’s the point of a relay? Just expose the ports that Syncthing uses and configure your client to connect to your home Syncthing instance using your dynamic DNS name. No public or private relays are required.

- Port forward the following in your router to the local Syncthing host; any client will then be able to connect to it directly:
  - Port 22000/TCP: TCP-based sync protocol traffic
  - Port 22000/UDP: QUIC-based sync protocol traffic
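To check the TCP forward from outside your network, a minimal Python sketch like the one below simply attempts a TCP connection to the forwarded port. It assumes your dynamic DNS name already resolves to your router's public IP; the function name `tcp_port_open` is hypothetical. It only covers the TCP rule: QUIC runs over UDP, which has no handshake that a plain socket connect can probe.

```python
import socket


def tcp_port_open(host: str, port: int = 22000, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the name (e.g. a dynamic DNS hostname)
        # and performs a full TCP handshake with the first address that answers.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS resolution failures.
        return False
```

For example, call `tcp_port_open("myhome.example.com")` (a placeholder name) from a machine outside your LAN. Running it from inside the LAN can be misleading if the router does not support NAT loopback (hairpinning).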

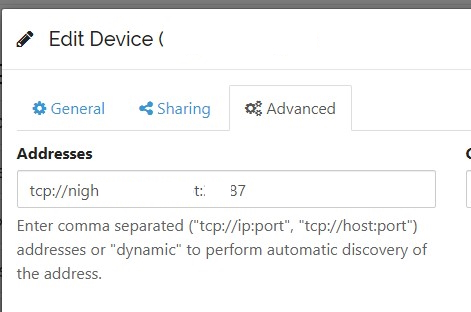

- On the client, edit the home device and set its addresses to your dynamic DNS name so that it connects directly.
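As a sketch of what goes into the home device's Addresses field on the client, the helper below builds the comma-separated list that field accepts (`tcp://host:port`, `quic://host:port`, and optionally the keyword `dynamic`). The helper name and the hostname are hypothetical; 22000 is the default port from the list above.

```python
def device_addresses(host: str, port: int = 22000, keep_dynamic: bool = False) -> str:
    """Build a value for a Syncthing device's Addresses field (hypothetical helper)."""
    # Explicit addresses for both transports: TCP and QUIC (UDP).
    addrs = [f"tcp://{host}:{port}", f"quic://{host}:{port}"]
    if keep_dynamic:
        # "dynamic" additionally lets Syncthing use discovered addresses as a fallback.
        addrs.append("dynamic")
    return ", ".join(addrs)
```

For example, `device_addresses("myhome.example.com")` yields `tcp://myhome.example.com:22000, quic://myhome.example.com:22000`, which can be pasted into the device's Addresses setting in the Edit Device dialog.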

For extra security, you can change the Syncthing port or run the entire thing over a WireGuard VPN, which is what I also do.

Note that even without the VPN, all Syncthing traffic is protected by TLS.
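If you go with changing the port, three places have to agree: the home host's sync protocol listen addresses, the router's port forwards, and the address the client uses for the home device. The Python sketch below (a hypothetical helper) derives the first two from one port number. The listen-address format is modelled on Syncthing's documented default, which expands to `tcp://0.0.0.0:22000, quic://0.0.0.0:22000, dynamic+https://relays.syncthing.net/endpoint`.

```python
def custom_port_plan(port: int) -> dict:
    """Derive matching server and router settings for a custom sync port (hypothetical helper)."""
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    return {
        # Home host: Settings > Connections > Sync Protocol Listen Addresses.
        # The relay entry from the default value is left out, since no relay is needed.
        "listen_addresses": f"tcp://0.0.0.0:{port}, quic://0.0.0.0:{port}",
        # Router: forward both protocols on the new port to the Syncthing host.
        "router_forwards": [f"{port}/TCP", f"{port}/UDP"],
    }
```

The client's device address then has to use the same port, e.g. `tcp://myhome.example.com:22001` for a hypothetical port 22001.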

I’ve been using Ansible as well, and it’s great.